🚀 Welcome to my Blog

Here, I share my experiences and insights on various topics, covering technology strategy, cloud computing, and artificial intelligence. Stay tuned for regular updates!

FraudGPT and WormGPT are often referred to as "Evil LLMs" (Large Language Models). They are generative AI tools sold on the dark web and cybercrime forums, specifically created to assist malicious actors with various automated tasks.

Here is a breakdown of what they are and what they do:

FraudGPT is a malicious chatbot explicitly designed for fraudulent activities. It is marketed toward cybercriminals who may lack coding or sophisticated social engineering skills.

Its primary capabilities include:

In short, it automates the creation of the content and materials needed to execute large-scale, personalized scams.

WormGPT is another powerful, dark-web-based AI tool that focuses heavily on high-level cyberattacks and coding.

It is particularly known for:

Both of these models strip away the security guardrails and ethical constraints found in public AI systems, effectively making sophisticated cybercrime easier and faster to execute. Tools like these are a reason cyber defense needs to shift from reactive to proactive.

Starting December 16, 2025, Meta will begin incorporating user chatbot conversations into its advertising ecosystem. According to The Wall Street Journal, the tech giant will analyze interactions with its AI chatbot to better personalize ads. Sensitive topics such as health, religion, and politics are excluded from this data collection, but everything else—from casual shopping queries to entertainment discussions—may become part of Meta’s massive advertising dataset.

This decision reflects Meta’s continued reliance on advertising revenue, which makes up the vast majority of its business. By integrating chatbot interactions, the company aims to capture intent-rich data that goes beyond likes, shares, and browsing patterns. For example, a user asking the chatbot about hiking trails or best running shoes could soon be served highly targeted sportswear ads.

Critics argue that this move raises significant privacy concerns. Even if Meta excludes sensitive categories, conversations with chatbots often feel more personal than standard browsing behavior. Privacy advocates worry that the blurred line between casual AI assistance and commercial surveillance will deepen user mistrust. Some regulators may step in to review whether such practices align with data protection laws, particularly in Europe under GDPR and in regions with emerging AI regulation.

Supporters, however, suggest that this could make ads more relevant and less intrusive. If personalization reduces irrelevant advertising noise, it may improve user experience while keeping Meta’s core business profitable. For Meta, this also marks a strategic step toward competing with Google’s intent-driven search ads and Amazon’s commerce-focused advertising.

Looking ahead, the long-term implications of this strategy will depend on execution and regulation. If Meta fails to strike a balance between personalization and privacy, the backlash could outweigh the benefits. But if managed responsibly, this approach may redefine how conversational AI shapes advertising in the future.

Large Language Models (LLMs) like GPT have transformed the AI landscape by generating coherent text, writing code, and even offering creative insights. However, their limitation lies in their scope: they operate only in the space of words and patterns, not in understanding real-world cause-and-effect. To push beyond these boundaries, major AI research labs are investing in “world models”—AI systems designed to simulate environments, reason about physical dynamics, and predict future outcomes.

Unlike LLMs, which are trained primarily on text corpora, world models integrate multimodal data—including video, 3D simulations, and environmental interactions. This allows them to “imagine” scenarios, test hypotheses, and develop a deeper sense of situational awareness. For example, in robotics, a world model could predict how a robot’s arm will move through space when picking up a fragile object, adjusting grip and motion in real-time.
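The idea of "imagining" outcomes before acting can be illustrated with a very small sketch. Everything here is an assumption made for illustration: `predict_next` is a hand-written toy dynamics function standing in for a learned world model, and `plan` simply tries a few candidate actions in simulation and keeps the one that ends closest to a target.

```python
def predict_next(position, velocity, push, dt=0.1):
    """Toy dynamics: a hypothetical stand-in for a learned world model."""
    velocity = velocity + push * dt
    position = position + velocity * dt
    return position, velocity


def plan(position, velocity, target, actions=(-1.0, 0.0, 1.0), horizon=5):
    """Simulate each constant action for `horizon` steps and keep the best one."""
    def final_error(push):
        p, v = position, velocity
        for _ in range(horizon):
            p, v = predict_next(p, v, push)  # imagined rollout, nothing moves for real
        return abs(target - p)

    return min(actions, key=final_error)


# The target lies in the positive direction, so the planner picks the positive push.
print(plan(position=0.0, velocity=0.0, target=1.0))
```

A real system would learn the dynamics from video or sensor data and search over far richer action sequences, but the loop of predicting, scoring, and then acting is the same.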

The potential applications extend beyond robotics. In healthcare, world models could simulate how new drugs interact with biological systems before clinical trials. In climate science, they could model the complex interplay of weather, ocean currents, and emissions to evaluate intervention strategies. In finance, they might simulate market behavior under different economic shocks, helping institutions plan for volatility.

However, the rise of world models introduces new challenges. Alignment and safety concerns become more complex when AI is no longer just predicting the next word but actively simulating environments and testing strategies. If such models develop internal representations misaligned with reality—or worse, with human values—they could act in unpredictable or harmful ways. Researchers are therefore focused not only on technical advances but also on developing rigorous governance structures.

As the Financial Times reports, the push toward world models may mark a crucial step on the path to Artificial General Intelligence (AGI). Whether this evolution leads to breakthroughs that benefit humanity or to new risks requiring careful regulation depends on how responsibly the technology is developed and deployed.

In a recent statement, Microsoft’s AI leadership cautioned that within the next 5 to 10 years, advanced artificial intelligence systems could require “military-grade intervention” to prevent misuse or catastrophic failures. The warning underscores growing concerns among AI developers that rapid progress in AI capabilities may outpace society’s ability to regulate or contain them.

The remark, reported by Windows Central, highlights the dual nature of advanced AI: it promises enormous benefits but also carries unprecedented risks. Potential scenarios include rogue autonomous agents, cyberattacks powered by AI, or the development of AI systems so advanced that they operate beyond human oversight. These risks could necessitate new defense protocols similar to those used for nuclear technology, where containment and international cooperation are critical.

Some experts argue that this is a call to action for governments and international organizations. Rather than waiting for crises, they suggest establishing global AI oversight bodies, mandatory auditing standards, and safety benchmarks today. Others caution against alarmism, noting that predictions about AI “superintelligence” have been made before without materializing.

What sets this warning apart is that it comes from inside a leading AI company deeply involved in building state-of-the-art systems. The message appears less about exaggerating risks and more about urging proactive governance. The next decade could determine whether AI becomes a transformative tool for progress or a destabilizing force requiring extreme interventions.

Google DeepMind has released an update to its “Frontier Safety Framework,” the policy structure guiding the safe development of its most advanced AI systems. The framework outlines risk assessment protocols, red lines for deployment, and intervention measures to prevent unintended consequences. This latest update expands the framework to cover new risk domains and strengthens its assessment methodology.

The framework was first introduced in 2024 to ensure that the race toward frontier AI models (those approaching or exceeding human-level performance in some domains) does not outpace safety standards. The updated version considers new risk categories, such as large-scale disinformation, autonomous decision-making in sensitive areas, and the potential for emergent behaviors in multimodal systems.

Importantly, DeepMind emphasizes collaboration with external reviewers and regulators to validate its assessments. This shift reflects growing consensus in the AI field that companies cannot be the sole arbiters of safety for technologies with global impact. By opening parts of its process to independent scrutiny, DeepMind hopes to build public trust and set industry standards.

While critics argue that voluntary frameworks are insufficient without legally binding regulation, DeepMind’s effort is seen as a meaningful step toward responsible innovation. If widely adopted, such practices could create a baseline of accountability across the AI sector. As AI systems grow more powerful, frameworks like this may determine whether progress is sustainable—or risky.

Researchers at MIT have unveiled two major breakthroughs in AI-assisted materials science. First, a new platform called “CRESt” (Copilot for Real-world Experimental Scientists) can analyze vast scientific literature, run experiments, and propose novel materials. Second, MIT’s “SCIGEN” tool allows generative AI to design materials following specific rules, enabling the creation of compounds with desired properties.

The CRESt platform works by integrating knowledge from diverse sources—academic journals, patents, and experimental datasets—and then generating hypotheses for new materials. Unlike traditional research, which can take years of manual cross-referencing, CRESt automates much of the process and even suggests new experimental paths. Early results show promise in energy storage materials, semiconductors, and sustainable chemistry.

Meanwhile, SCIGEN takes generative AI beyond text and images by encoding design principles directly into its outputs. Instead of simply generating molecules at random, it follows scientific constraints to ensure that proposed materials have the right balance of stability, conductivity, or other properties. This makes it possible to accelerate discovery while reducing wasted effort in the lab.

Together, these breakthroughs represent a shift in how materials science is conducted. By coupling machine learning with traditional experimentation, scientists can compress decades of trial-and-error into months or even weeks. This could lead to breakthroughs in renewable energy, faster electronics, and new sustainable materials for construction and manufacturing.

While promising, challenges remain. AI systems still depend on the quality of their input data, and experimental validation remains essential. Researchers stress that these tools are designed to augment, not replace, human expertise. Still, the potential impact of CRESt and SCIGEN is vast: they may accelerate humanity’s ability to build the next generation of materials essential for progress.

Huawei has announced the creation of a new AI supernode cluster built entirely with domestic chipmaking technologies. The move is designed to reduce dependence on Nvidia and other Western suppliers amid ongoing U.S.–China trade tensions. Reported by the South China Morning Post, the development highlights China’s determination to achieve technological self-sufficiency in critical AI infrastructure.

Huawei’s supernode leverages advances in local semiconductor design and fabrication, including custom processors optimized for AI workloads. This step is particularly significant because high-performance chips like Nvidia’s A100 and H100 are currently restricted from export to China. By building alternatives domestically, Huawei aims to maintain competitiveness in AI training and inference at scale.

Analysts note that achieving full parity with Nvidia’s offerings remains challenging. Nvidia’s hardware benefits from years of ecosystem development, including optimized software frameworks like CUDA. However, Huawei’s push is less about immediate technical equivalence and more about long-term resilience. By controlling its hardware supply chain, China can mitigate the risks of sanctions and ensure continuity in its AI ambitions.

This development also has global implications. If Huawei’s approach proves viable, it could encourage other nations to pursue localized AI infrastructure, potentially leading to a more fragmented global AI ecosystem. While this may reduce dependency risks, it could also slow international collaboration and standardization.

Ultimately, Huawei’s move signals that AI is not only a technological race but also a geopolitical one. The ability to independently build and scale AI hardware may define national competitiveness in the decades ahead.

A new study shows that artificial intelligence can extract significant diagnostic insights from standard blood tests. Reported in ScienceDaily, the research demonstrates that combining routine bloodwork with AI analysis can predict outcomes in spinal cord injury patients, potentially transforming how simple lab data is used in healthcare.

The study trained AI models on large datasets of patient bloodwork, correlating biochemical markers with recovery outcomes. This allowed the system to identify subtle patterns invisible to human physicians. The results show that with just everyday lab results—no specialized or costly procedures—clinicians can gain early warning about complications and long-term recovery trajectories.

Beyond spinal cord injuries, the same principle could apply to many areas of medicine. For example, AI could flag early signs of organ decline, metabolic imbalances, or even neurological conditions. By repurposing routine tests already collected in hospitals worldwide, this approach could deliver predictive healthcare at minimal additional cost.

The promise is particularly strong in low-resource healthcare systems. Since bloodwork is widely available, adding AI analysis could dramatically improve care without requiring new infrastructure. Doctors could be empowered with decision-support tools that extend their diagnostic reach, ultimately leading to better patient outcomes.

Still, challenges remain. AI predictions must be validated across diverse populations, and ethical questions arise around false positives or over-reliance on automated systems. Yet the overall direction is clear: AI has the potential to transform humble blood tests into powerful diagnostic tools that democratize advanced healthcare.

At the G20 summit, UNESCO announced an ambitious initiative to strengthen AI capacity in Africa. The program aims to train 15,000 civil servants and 5,000 judicial personnel in the responsible use of artificial intelligence, with a focus on human rights, ethics, and inclusion. By prioritizing governance as much as technical capacity, UNESCO hopes to build sustainable AI ecosystems across the continent.

The initiative reflects growing awareness that AI adoption is not just a technical issue but also a societal one. In many African nations, rapid digitalization risks creating gaps between technology deployment and regulatory readiness. Training policymakers and judicial staff ensures that governance structures evolve alongside AI adoption, minimizing risks of misuse or exclusion.

UNESCO’s program will also emphasize localized AI development. By encouraging African researchers and institutions to adapt AI tools to their cultural and economic contexts, the initiative seeks to avoid one-size-fits-all solutions imported from abroad. This could empower local industries, strengthen trust, and create AI systems that reflect regional values.

Importantly, the initiative is rights-focused. UNESCO stresses the need to protect privacy, avoid discrimination, and ensure that AI benefits are equitably distributed. This aligns with global calls for ethical AI, but grounds them in Africa’s specific development challenges and opportunities.

If successful, the initiative could serve as a model for other regions. By treating AI as both a technological and social issue, UNESCO may demonstrate how international organizations can guide responsible AI adoption in ways that maximize opportunity while safeguarding rights.

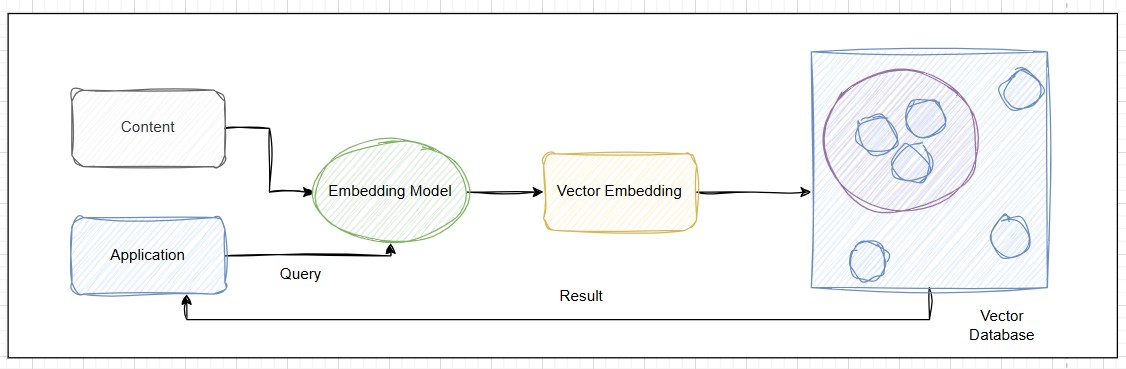

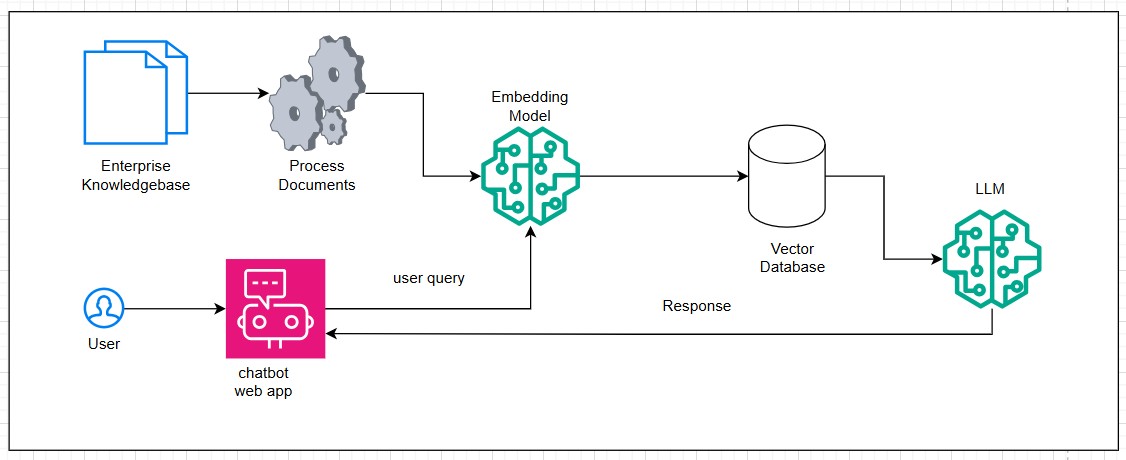

In today's AI-driven world, traditional databases are no longer sufficient for handling unstructured data such as images, videos, audio, and natural language. Enter the vector database—a new class of databases designed to store, index, and query vector embeddings, the numerical representations of unstructured data.

Vector databases power modern applications like semantic search, recommendation systems, fraud detection, and generative AI by enabling machines to "understand" similarity between data points, not just exact matches.

A vector is simply a list of numbers that represents data in a high-dimensional space.

For example, an AI model might convert a sentence like "I love pizza" into a 768-dimensional vector.

Similar sentences (e.g., "Pizza is great") will have vectors close to each other in this high-dimensional space.

This closeness allows us to measure semantic similarity using mathematical distance metrics.
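One common distance metric is cosine similarity. The sketch below is a minimal illustration using made-up 3-dimensional vectors. The numbers are assumptions chosen by hand, not the output of any real embedding model, which would typically produce hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for illustration only.
love_pizza = [0.9, 0.1, 0.3]     # "I love pizza"
pizza_great = [0.8, 0.2, 0.4]    # "Pizza is great"
stock_report = [0.1, 0.9, 0.2]   # an unrelated sentence

print(cosine_similarity(love_pizza, pizza_great))    # close to 1
print(cosine_similarity(love_pizza, stock_report))   # noticeably lower
```

The two pizza sentences score much higher with each other than either does with the unrelated one, which is the property semantic search relies on.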

A vector database stores embeddings and makes it easy to index them and query them by similarity.

This enables Approximate Nearest Neighbor (ANN) Search, which finds the "closest" vectors quickly, even in billions of records.
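To see what ANN search is approximating, it helps to look at the exact version first. The sketch below is a brute-force nearest-neighbor scan over a tiny in-memory index. The document names and vectors are hypothetical, and this is not how any particular vector database is implemented. Production systems replace the full scan with an index structure that trades a little accuracy for a large speedup.

```python
import heapq
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query, index, k=2):
    """Exact k-nearest-neighbor scan: compares the query with every stored vector."""
    return heapq.nsmallest(k, index, key=lambda name: euclidean(query, index[name]))


# Hypothetical index mapping document names to toy embeddings.
index = {
    "doc_pizza": [0.8, 0.2, 0.4],
    "doc_pasta": [0.7, 0.3, 0.5],
    "doc_stocks": [0.1, 0.9, 0.2],
}

print(nearest([0.9, 0.1, 0.3], index, k=2))  # the two food documents, closest first
```

The cost of this scan grows linearly with the number of stored vectors, which is why approximate indexes become necessary at large scale.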

Examples of popular solutions and libraries:

As unstructured data grows, vector databases solve search and retrieval bottlenecks by enabling semantic search and reasoning at scale. They bridge the gap between human-like understanding and machine processing, powering smarter, more context-aware applications.

Vector databases are becoming the backbone of AI-powered systems. By converting raw data into embeddings and enabling similarity-based retrieval, they unlock intelligent search, personalization, and contextual understanding. Expect hybrid systems—combining SQL-style queries with semantic vector queries—to reshape enterprise data architecture in the years ahead.
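A hybrid query of this kind can be sketched in a few lines. This is only an illustration under stated assumptions: the rows, the `category` field, and the `hybrid_search` helper are all invented here, and a real system would push both the filter and the ranking into the database engine.

```python
def dot(a, b):
    """Dot product, used here as a simple similarity score."""
    return sum(x * y for x, y in zip(a, b))


def hybrid_search(rows, query_vec, category, limit=2):
    """Apply a structured filter first, then rank the survivors by vector similarity."""
    candidates = [r for r in rows if r["category"] == category]    # SQL-style WHERE
    candidates.sort(key=lambda r: dot(r["embedding"], query_vec), reverse=True)
    return [r["id"] for r in candidates[:limit]]


# Hypothetical product rows with toy 2-dimensional embeddings.
rows = [
    {"id": 1, "category": "shoes", "embedding": [0.9, 0.1]},
    {"id": 2, "category": "shoes", "embedding": [0.2, 0.8]},
    {"id": 3, "category": "hats", "embedding": [0.95, 0.05]},
]

print(hybrid_search(rows, [1.0, 0.0], "shoes"))
```

Row 3 is the closest vector overall, but the structured filter removes it before ranking, which is the behavior a pure similarity search cannot express.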

The integration of artificial intelligence into healthcare is transforming the industry at an unprecedented pace. From diagnostics to treatment personalization, AI is enabling healthcare providers to deliver more accurate, efficient, and accessible care. The potential for AI to revolutionize healthcare lies in its ability to process vast amounts of medical data, identify patterns invisible to the human eye, and support clinical decision-making.

One of the most significant applications of AI in healthcare is medical imaging analysis. AI algorithms can now detect anomalies in X-rays, MRIs, and CT scans with accuracy that, in some studies, matches or exceeds that of human radiologists. These systems can identify early signs of diseases like cancer, cardiovascular conditions, and neurological disorders, enabling earlier interventions and improved patient outcomes. The speed of AI analysis also means reduced waiting times for patients and decreased workload for healthcare professionals.

Beyond diagnostics, AI is revolutionizing drug discovery and development. Traditional drug development can take over a decade and cost billions of dollars. AI algorithms can analyze biological data to identify potential drug candidates, predict their effectiveness, and even simulate clinical trials, significantly accelerating the process. This capability became particularly evident during the COVID-19 pandemic when AI helped researchers identify existing drugs that could be repurposed to treat the virus.

Personalized medicine represents another frontier where AI is making substantial contributions. By analyzing a patient's genetic information, lifestyle factors, and medical history, AI systems can help physicians develop tailored treatment plans. This approach moves healthcare from a one-size-fits-all model to precision medicine, where interventions are customized to individual patients, potentially increasing treatment efficacy while reducing side effects.

Administrative tasks, which consume a significant portion of healthcare resources, are also being transformed by AI. Natural language processing algorithms can transcribe medical notes, code insurance claims, and manage patient scheduling more efficiently than human staff. This automation frees up healthcare professionals to focus on patient care rather than paperwork, potentially reducing burnout in an industry known for high stress levels.

Despite these advancements, the AI revolution in healthcare faces challenges. Data privacy concerns, regulatory hurdles, and the need for robust validation of AI systems are significant barriers. Additionally, there's the critical issue of ensuring that AI healthcare solutions don't perpetuate or amplify existing biases in medical data. As we move forward, collaboration between technologists, healthcare providers, regulators, and ethicists will be essential to harness AI's full potential while mitigating risks.

The future of AI in healthcare looks promising, with emerging applications in robotic surgery, virtual nursing assistants, and predictive analytics for population health management. As these technologies mature, they have the potential to make healthcare more proactive rather than reactive, shifting the focus from treatment to prevention. The AI revolution in healthcare is not about replacing human practitioners but augmenting their capabilities, ultimately leading to better patient outcomes and a more sustainable healthcare system.

The recent announcement of a stable 256-qubit quantum processor represents a monumental leap forward in quantum computing capabilities. This breakthrough brings us closer to achieving quantum advantage—the point where quantum computers can solve problems that are practically impossible for classical computers. The new processor demonstrates unprecedented coherence times and error correction capabilities, addressing two of the most significant challenges in quantum computing.

What makes this development particularly remarkable is the processor's ability to maintain quantum states for extended periods. Previous quantum systems struggled with decoherence, where quantum information would be lost due to environmental interference. The new architecture incorporates innovative error-correction techniques that protect quantum information, allowing for more complex calculations to be performed accurately. This stability is crucial for practical applications of quantum computing.

The implications of this breakthrough extend across multiple industries. In pharmaceuticals, quantum computers could simulate molecular interactions at an atomic level, dramatically accelerating drug discovery. Material scientists could design new compounds with specific properties, potentially leading to superconductors that work at room temperature or more efficient battery technologies. Financial institutions could optimize complex portfolios and risk models in ways currently impossible with classical computing.

Cryptography is another field that will be profoundly impacted. Widely used public-key encryption methods such as RSA rely on the difficulty of factoring large numbers, a task that would take classical computers thousands of years. A sufficiently large, error-corrected quantum computer running Shor's algorithm could, in principle, break such encryption in hours or days. This threat has spurred the development of quantum-resistant cryptography, and with this new processor, researchers can now test these new encryption methods under realistic conditions.
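The factoring problem itself is easy to state. The sketch below factors a tiny number by trial division, the most naive classical method. The example number is chosen here for illustration, and real keys use numbers hundreds of digits long, where this loop (and every known classical method) becomes impractical.

```python
def factor_semiprime(n):
    """Return (p, q) with p * q == n by trial division, or None if n is prime."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    return None


print(factor_semiprime(3233))  # (53, 61)
```

The loop runs up to the square root of `n`, so each extra digit in the key multiplies the work. That growth is what keeps factoring-based encryption safe against classical machines.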

The road to this breakthrough wasn't easy. Researchers had to overcome significant technical hurdles, including maintaining extremely low temperatures (near absolute zero) and isolating qubits from environmental noise. The team developed a novel approach to qubit design that reduces interference while increasing connectivity between qubits. This enhanced connectivity allows for more complex quantum circuits to be built, essential for solving real-world problems.

Looking ahead, the research team is already working on scaling this technology to 512 and eventually 1024 qubits. Each additional qubit doubles the size of the quantum state space, so doubling the qubit count squares it. However, the challenge isn't just about adding more qubits; it's about maintaining or improving coherence times and error rates as the system scales. The architecture developed for this processor appears scalable, which is promising for future developments.
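The scaling can be made concrete with a little arithmetic. Describing the state of n qubits takes 2^n complex amplitudes, so the short sketch below prints how large that number gets at each qubit count mentioned above. Note the assumption built into it: state-space size is only a rough proxy, since usable computational power also depends on error rates and coherence.

```python
def state_space_size(qubits):
    """Number of complex amplitudes needed to describe an n-qubit state: 2**n."""
    return 2 ** qubits


for n in (256, 512, 1024):
    digits = len(str(state_space_size(n)))
    print(f"{n} qubits -> 2**{n} amplitudes (a {digits}-digit number)")

# Doubling the qubit count squares the state-space size.
print(state_space_size(512) == state_space_size(256) ** 2)  # True
```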

While we're still years away from quantum computers being commonplace, this breakthrough significantly shortens the timeline. It also demonstrates that the theoretical potential of quantum computing is gradually becoming practical reality. As these systems continue to improve, they'll likely work in tandem with classical computers, each handling the tasks they're best suited for. The quantum computing revolution is no longer a distant possibility—it's unfolding now, and this breakthrough marks a critical milestone on that journey.

The pandemic accelerated a remote work revolution that was already underway, but what comes next? As organizations settle into new working models, we're seeing the emergence of hybrid approaches that blend the best of remote and in-office work. The future of work isn't about choosing between fully remote or fully office-based—it's about creating flexible, adaptive systems that prioritize outcomes over presence.

Companies are increasingly recognizing that remote work isn't just a temporary solution but a fundamental shift in how we organize work. This realization is driving investments in digital infrastructure, collaboration tools, and management practices suited for distributed teams. The most successful organizations are those that view remote work not as a cost-saving measure but as an opportunity to access global talent, increase employee satisfaction, and build more resilient operations.

One of the most significant changes we're witnessing is the redefinition of the workplace itself. Instead of being a physical location where work happens, the workplace is becoming a network of connected spaces—home offices, co-working facilities, and company headquarters—each serving different purposes. This distributed model requires rethinking everything from team building to performance evaluation. Companies that succeed will be those that master the art of creating cohesion across distance.

Technology continues to play a crucial role in enabling effective remote work. Beyond video conferencing, we're seeing the rise of virtual office platforms that recreate the serendipitous interactions of physical spaces. AI-powered tools are helping managers identify when team members are struggling with isolation or burnout. Digital whiteboards and collaborative document editing have become sophisticated enough to rival their physical counterparts for many use cases.

The impact on urban planning and real estate is profound. As companies reduce their office footprints, city centers are evolving to serve new purposes. Suburban and rural areas are experiencing revitalization as knowledge workers move away from traditional business hubs. This geographic redistribution could help address issues of housing affordability and traffic congestion in major metropolitan areas while bringing economic opportunities to smaller communities.

However, the remote work revolution also presents challenges. Not all jobs can be performed remotely, potentially creating a new class divide between remote-capable and location-dependent workers. There are concerns about the erosion of organizational culture and the difficulty of onboarding new employees in fully remote settings. Additionally, the blurring of boundaries between work and home life has led to increased reports of burnout.

The most forward-thinking companies are addressing these challenges head-on. They're implementing "right to disconnect" policies, creating virtual social spaces, and reimagining career progression in a distributed environment. The future of work will likely involve greater flexibility but also more intentionality in how we design work experiences. As we move forward, the organizations that thrive will be those that recognize that work is an activity, not a place, and design their policies accordingly.

While blockchain technology gained fame through cryptocurrencies like Bitcoin and Ethereum, its potential applications extend far beyond digital money. At its core, blockchain is a distributed ledger technology that enables secure, transparent, and tamper-resistant record-keeping. These characteristics make it valuable for numerous applications across industries, from supply chain management to digital identity verification.
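The "tamper-resistant" property comes from chaining records together with cryptographic hashes. Here is a minimal sketch of that idea using Python's standard `hashlib`. The block layout and helper names are assumptions made for illustration, and the sketch leaves out everything that makes a real blockchain distributed (consensus, networking, signatures).

```python
import hashlib
import json


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a record that is linked to the block before it."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({"index": index, "data": data, "prev": prev_hash,
                  "hash": block_hash(index, data, prev_hash)})


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, "raw materials shipped")
append_block(ledger, "goods received at warehouse")
print(is_valid(ledger))          # True
ledger[0]["data"] = "tampered"   # rewrite history
print(is_valid(ledger))          # False
```

Changing any earlier record changes its hash, which breaks the link stored in every later block, so tampering is detectable rather than impossible.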

One of the most promising non-cryptocurrency applications of blockchain is in supply chain management. By creating an immutable record of a product's journey from raw materials to end consumer, blockchain can provide unprecedented transparency. This capability is particularly valuable for industries where provenance matters, such as pharmaceuticals, luxury goods, and food products. Consumers can verify authenticity, while companies can quickly identify and address issues in their supply chains.

Digital identity represents another area where blockchain technology shows great promise. Traditional identity systems are fragmented, insecure, and often exclude marginalized populations. Blockchain-based identity systems give individuals control over their personal data while providing a secure way to verify credentials. This approach could streamline processes like opening bank accounts, applying for loans, or accessing government services while reducing identity fraud.

The healthcare industry stands to benefit significantly from blockchain adoption. Patient records stored on a blockchain could be securely shared between providers while maintaining patient privacy through encryption and permissioned access. Clinical trial data recorded on a blockchain would be tamper-proof, increasing trust in research findings. Pharmaceutical supply chains could use blockchain to combat counterfeit drugs, a problem that causes hundreds of thousands of deaths annually.

Voting systems represent another compelling use case. Blockchain-based voting could increase accessibility while reducing fraud and ensuring the integrity of election results. Each vote would be recorded as a transaction on the blockchain, making it verifiable yet anonymous. While technical and social challenges remain, several countries and organizations are experimenting with blockchain voting for everything from shareholder meetings to municipal elections.

Intellectual property management is being transformed by blockchain technology. Artists, musicians, and writers can timestamp their creations on a blockchain, creating an immutable record of ownership. Smart contracts can automate royalty payments, ensuring creators are compensated fairly when their work is used. This application has particular significance in the digital age, where content is easily copied and shared without proper attribution or compensation.

Despite these promising applications, blockchain technology faces significant hurdles. Scalability remains a challenge, with many blockchain networks struggling to handle transaction volumes comparable to traditional systems. Energy consumption is another concern, though newer consensus mechanisms are addressing this issue. Regulatory uncertainty and interoperability between different blockchain platforms also present obstacles to widespread adoption.

As the technology matures, we're likely to see hybrid approaches that combine blockchain with traditional databases, using each where it's most appropriate. The future of blockchain beyond cryptocurrency isn't about replacing existing systems entirely but enhancing them where transparency, security, and decentralization add value. As developers address current limitations and businesses discover new use cases, blockchain's impact will extend far beyond the financial applications that first brought it to prominence.

As we approach 2025, edge computing is evolving from an emerging technology to a critical component of modern IT infrastructure. The exponential growth of IoT devices, the demands of real-time applications, and privacy concerns are driving computation and data storage closer to where data is generated. This shift from centralized cloud computing to distributed edge architectures is reshaping how we process information and deliver digital services.

One of the most significant trends for 2025 is the convergence of edge computing with 5G networks. The low latency and high bandwidth of 5G enable more sophisticated edge applications, from autonomous vehicles to augmented reality experiences. Telecom providers are transforming their infrastructure into distributed computing platforms, placing edge data centers at cell towers to serve nearby devices. This synergy between 5G and edge computing will unlock new use cases across industries.

AI at the edge is another major trend gaining momentum. Rather than sending data to the cloud for processing, AI models are increasingly deployed directly on edge devices. This approach reduces latency, conserves bandwidth, and enhances privacy by keeping sensitive data local. We're seeing specialized hardware designed specifically for edge AI workloads, with chips optimized for neural network inference rather than general-purpose computing.

The industrial sector is embracing edge computing to enable the next generation of smart factories. By processing data from sensors and cameras on-site, manufacturers can achieve real-time quality control, predictive maintenance, and optimized production flows. Edge computing allows factories to continue operating even when cloud connectivity is interrupted, a critical requirement for continuous manufacturing processes.

Security is evolving to address the unique challenges of edge environments. Traditional perimeter-based security models are inadequate for distributed edge architectures. Zero-trust approaches, where every access request is verified regardless of origin, are becoming standard. Hardware-based security features, such as trusted platform modules (TPMs) and secure enclaves, are being integrated into edge devices to protect against physical tampering.
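As a rough illustration of the zero-trust idea, the sketch below checks every request for both a valid signature and an explicit permission, no matter where the request comes from. The function names, the `device_id:action` message format, and the permissions table are assumptions for this example, not a standard protocol.

```python
import hashlib
import hmac


def sign_request(secret: bytes, device_id: str, action: str) -> str:
    """Sign a request with a shared secret (hypothetical message format)."""
    message = f"{device_id}:{action}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize(secret: bytes, device_id: str, action: str,
              signature: str, allowed_actions: dict) -> bool:
    """Verify identity first, then permission. Nothing is trusted by default."""
    expected = sign_request(secret, device_id, action)
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(expected, signature):
        return False
    return action in allowed_actions.get(device_id, set())


secret = b"demo-secret"  # in practice this would come from secure storage
permissions = {"sensor-17": {"read_temperature"}}

good_sig = sign_request(secret, "sensor-17", "read_temperature")
print(authorize(secret, "sensor-17", "read_temperature", good_sig, permissions))
```

A correctly signed request is still refused if the device has no permission for the action, which is the point: authentication and authorization are checked on every call.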

Edge computing is also driving innovation in software development practices. Containerization and lightweight virtualization technologies allow applications to be packaged with their dependencies and deployed consistently across diverse edge environments. DevOps is evolving into "EdgeOps," with new tools and practices for managing distributed applications across thousands or even millions of edge nodes.

Sustainability is becoming a key consideration in edge computing deployments. While edge computing can reduce energy consumption by minimizing data transmission to distant cloud data centers, the proliferation of edge devices creates its own environmental impact. Manufacturers are responding with more energy-efficient hardware designs, and software solutions are optimizing workloads to minimize power consumption without compromising performance.

Looking ahead to 2025 and beyond, we can expect edge computing to become increasingly autonomous. Self-managing edge networks that can configure, heal, and optimize themselves will reduce the operational burden on IT teams. Federated learning approaches will enable edge devices to collaboratively improve AI models without centralizing training data. As these trends converge, edge computing will become an invisible yet essential layer of our digital infrastructure, powering everything from smart cities to personalized healthcare.
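The federated learning idea mentioned above can be sketched in a few lines. This toy version fits a line y = w*x + b, with each device training on its own data and sharing only the resulting weights, which the coordinator averages by dataset size. The function names, learning rate, and sample data are assumptions for illustration; real systems add secure aggregation, client sampling, and much more.

```python
def local_update(weights, data, lr=0.1):
    """One pass of gradient descent on a device's private data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return (w, b)


def federated_average(updates, sizes):
    """Combine device weights, weighting each by its number of samples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(device_data, rounds=200):
    """Run several rounds; raw data never leaves the devices."""
    global_weights = (0.0, 0.0)
    sizes = [len(d) for d in device_data]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in device_data]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Two devices, each holding different points from the line y = 2x + 1
device_data = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0)],
]
w, b = train(device_data)
print(round(w, 2), round(b, 2))
```

Only the pair `(w, b)` crosses the network in each round, so the coordinator learns the shared model without ever seeing an individual device's samples.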

The integration of artificial intelligence into cybersecurity represents a double-edged sword. On one hand, AI-powered security systems can detect threats with unprecedented speed and accuracy. On the other, cybercriminals are leveraging the same technology to create more sophisticated attacks. This evolving landscape requires a fundamental rethinking of how we approach digital security in an AI-driven world.

AI-enhanced security systems excel at pattern recognition, allowing them to identify anomalies that might indicate a breach. Machine learning algorithms can analyze network traffic in real-time, flagging suspicious activities that would be impossible for human analysts to detect amidst the noise of normal operations. These systems continuously learn from new data, adapting to emerging threats more quickly than traditional signature-based approaches.
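A very small example of the anomaly-detection idea: learn what "normal" looks like from a baseline, then flag observations that sit far outside it. This sketch uses a simple z-score rather than a trained machine learning model, and the function name, threshold, and traffic numbers are assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed values far from the baseline average."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Baseline never varies, so any different value is unusual
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]


# Requests per minute during normal operation (made-up numbers)
baseline = [120, 130, 125, 118, 122, 127, 131, 119]
# New measurements, including one sudden spike
observed = [124, 129, 900, 121]

print(find_anomalies(baseline, observed))  # → [2]
```

Production systems replace the fixed threshold with models that keep retraining on new traffic, but the shape of the problem is the same: model normal behavior, then score how far each new event deviates from it.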

However, the same capabilities that make AI valuable for defenders also empower attackers. AI can be used to automate attacks, creating malware that evolves to avoid detection. Phishing attempts generated by AI are becoming increasingly sophisticated, with personalized messages that are nearly indistinguishable from legitimate communications. Deepfake technology poses another threat, enabling impersonation attacks that could defeat voice- or video-based identity checks, including those used as a factor in multi-factor authentication.

The rise of AI in cybersecurity is also changing the nature of the skills required. Security professionals now need to understand machine learning concepts and data science principles alongside traditional security knowledge. This shift is creating a new category of "AI security specialists" who can both defend AI systems and use AI for defense. Organizations are investing in training programs to bridge this skills gap, recognizing that human expertise remains crucial even as automation increases.

Regulatory frameworks are struggling to keep pace with these technological developments. Data protection laws often don't adequately address the unique privacy concerns raised by AI systems that process vast amounts of personal information. There are also questions about liability when AI security systems fail—should responsibility lie with the organization deploying the system, the developers who created it, or the AI itself? These legal and ethical questions will need resolution as AI becomes more integrated into security infrastructure.

Looking ahead, we can expect to see more AI-versus-AI scenarios in cybersecurity, where defensive systems battle offensive ones in real-time. This arms race will likely lead to increasingly sophisticated security measures, but also more complex attacks. The organizations that succeed will be those that adopt a holistic approach, combining AI tools with human expertise, robust processes, and a security-first culture. As AI continues to evolve, our approach to cybersecurity must evolve with it, recognizing that in the age of AI, security is not a destination but a continuous journey of adaptation and improvement.

As climate change concerns intensify, the technology sector is increasingly focused on developing sustainable solutions that reduce environmental impact while maintaining performance. From energy-efficient data centers to circular economy principles in hardware manufacturing, sustainable technology is evolving from a niche concern to a central business imperative. These innovations are not just good for the planet—they're increasingly good for the bottom line.

One of the most significant areas of progress is in renewable energy integration for tech infrastructure. Major cloud providers are now powering their data centers with solar, wind, and other renewable sources, with several committing to 100% renewable energy targets. Beyond simply purchasing renewable energy credits, companies are investing in on-site generation and storage solutions, creating more resilient and sustainable operations.

The concept of "green software" is gaining traction as developers consider the energy consumption of their code. Optimized algorithms, efficient data structures, and thoughtful architecture decisions can significantly reduce the computational resources required for applications. This approach extends to user experience design, where interfaces that minimize unnecessary animations and processing can contribute to energy savings at scale.

Hardware sustainability is another critical frontier. Manufacturers are designing devices with repairability and upgradability in mind, challenging the tradition of planned obsolescence. Modular designs allow components to be replaced individually rather than requiring full device replacement. Companies are also increasing their use of recycled materials in production and establishing take-back programs to properly handle electronic waste.

The Internet of Things (IoT) is playing an unexpected role in sustainability efforts. Smart sensors can optimize energy usage in buildings, reduce water consumption in agriculture, and improve efficiency in manufacturing processes. These applications demonstrate how technology can not only reduce its own environmental footprint but also enable sustainability across other sectors of the economy.

Despite these advances, significant challenges remain. The increasing demand for computing power, driven by trends like AI and blockchain, threatens to outpace efficiency gains. There's also the problem of "rebound effects," where efficiency improvements lead to increased consumption rather than reduced environmental impact. Addressing these challenges will require both technological innovation and changes in consumer behavior and business models.

The future of sustainable technology lies in systems thinking—recognizing that solutions must consider the entire lifecycle of products and services. This approach includes designing for disassembly, implementing circular economy principles, and considering the indirect environmental impacts of technology decisions. As sustainability becomes a competitive advantage rather than just a compliance issue, we can expect to see continued innovation in this space, with technology playing a crucial role in addressing some of our most pressing environmental challenges.

Low-code and no-code platforms are revolutionizing software development by enabling people with limited programming experience to create applications through visual interfaces and configuration rather than traditional coding. This democratization of development is addressing the chronic shortage of skilled programmers while accelerating digital transformation across industries. The rise of these platforms represents a fundamental shift in who can build software and how quickly it can be delivered.

The appeal of low-code platforms lies in their ability to dramatically reduce development time. What might take a team of developers weeks or months to build traditionally can often be accomplished in days with low-code tools. This acceleration is particularly valuable for businesses needing to respond quickly to changing market conditions or internal requirements. The visual development environment also makes it easier for stakeholders to provide feedback throughout the process, reducing miscommunication and rework.

Beyond speed, low-code platforms are expanding the pool of potential application creators. Business analysts, subject matter experts, and other non-technical staff can now build solutions tailored to their specific needs without waiting for IT department resources. This "citizen development" movement is empowering those closest to business problems to create their own solutions, potentially leading to more relevant and effective applications.

However, the rise of low-code platforms doesn't mean the end of traditional software development. Professional developers are finding new roles as platform administrators, integration specialists, and creators of reusable components for low-code environments. The most effective implementations often combine low-code for rapid prototyping and simpler applications with traditional coding for complex, performance-critical systems.

As low-code platforms mature, they're expanding beyond basic form-building to encompass increasingly sophisticated capabilities. Many platforms now offer AI integration, mobile app development, and complex workflow automation. This expansion is blurring the lines between low-code and traditional development, creating a spectrum of tools suitable for different use cases and skill levels.

The future of low-code platforms likely involves greater integration with traditional development workflows. We're already seeing tools that can generate clean, maintainable code from visual designs, allowing professional developers to extend and customize applications created by citizen developers. This hybrid approach combines the speed of low-code with the flexibility of traditional coding, potentially offering the best of both worlds.

As low-code platforms continue to evolve, they'll play an increasingly important role in digital transformation efforts. The ability to quickly develop and iterate on applications allows organizations to experiment more freely and adapt more rapidly. While low-code won't replace traditional development entirely, it's becoming an essential tool in the modern software development toolkit, enabling organizations to do more with limited resources while bridging the gap between business needs and technical implementation.

The deployment of 5G networks is set to revolutionize the Internet of Things (IoT) by addressing key limitations of previous cellular technologies. With significantly higher speeds, lower latency, and greater device density support, 5G enables IoT applications that were previously impractical or impossible. This synergy between 5G and IoT is creating new possibilities across industries from manufacturing to healthcare.

One of the most significant impacts of 5G on IoT is the enablement of real-time applications. The ultra-low latency of 5G networks—potentially as low as 1 millisecond—allows for near-instantaneous communication between devices. This capability is crucial for applications like autonomous vehicles, remote surgery, and industrial automation where split-second decisions can have significant consequences. Previous networks simply couldn't provide the responsiveness required for these use cases.

The increased bandwidth of 5G also transforms what's possible with IoT devices. High-definition video from security cameras, detailed sensor data from industrial equipment, and rich multimedia from augmented reality applications can now be transmitted without the bottlenecks that plagued earlier networks. This bandwidth improvement enables more data-intensive applications and supports higher-quality experiences for end users.

5G's ability to support massive device connectivity—up to one million devices per square kilometer—addresses one of the fundamental challenges of large-scale IoT deployments. Smart cities, with their networks of sensors, cameras, and connected infrastructure, can now be implemented without overwhelming network capacity. This density support also enables more granular monitoring and control in environments like factories and agricultural settings.

Network slicing, a unique feature of 5G, allows operators to create virtual networks tailored to specific IoT applications. A smart grid might have a slice optimized for reliability, while an entertainment venue might have a slice optimized for bandwidth. This customization ensures that diverse IoT applications receive the network characteristics they need without compromising each other's performance.

Despite these advantages, the rollout of 5G for IoT faces challenges. The shorter range of higher-frequency 5G bands requires more infrastructure investment, particularly in rural areas. There are also concerns about security, as the increased connectivity expands the potential attack surface for malicious actors. Addressing these challenges will be crucial for realizing the full potential of 5G-enabled IoT.

Looking ahead, the combination of 5G and IoT will likely drive innovation in edge computing, as processing is distributed to handle the massive data flows generated by connected devices. We'll also see new business models emerge around IoT services that leverage 5G capabilities. As the technology matures and becomes more widely available, 5G-enabled IoT will transform not just how devices connect, but how entire industries operate, creating smarter, more efficient, and more responsive systems across society.

In an era of unprecedented data collection and analysis, privacy has become one of the most pressing concerns for individuals, businesses, and regulators. The digital age has created a paradox: while technology enables incredible convenience and personalization, it also poses significant risks to personal privacy. Navigating this landscape requires a careful balance between innovation and protection, with new approaches emerging to address these complex challenges.

The regulatory environment for data privacy has evolved significantly in recent years, with laws like GDPR in Europe and CCPA in California establishing new standards for data handling. These regulations reflect a growing recognition that personal data deserves protection similar to other valuable assets. Companies are now required to be more transparent about their data practices, obtain meaningful consent for data collection, and provide individuals with greater control over their information.

Technological solutions are emerging to help protect privacy in the digital age. Differential privacy techniques allow organizations to gather useful insights from data while preventing the identification of individuals. Homomorphic encryption enables computation on encrypted data without decryption, potentially allowing sensitive information to be processed while remaining private. These and other privacy-enhancing technologies are creating new possibilities for leveraging data while respecting individual rights.
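To show what differential privacy looks like in practice, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names, the `epsilon` value, and the sample ages are assumptions for illustration; a production system would also track a privacy budget across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that match the predicate."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 41, 52, 29, 47, 61, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(noisy)
```

Smaller values of `epsilon` add more noise and give stronger privacy, while larger values give more accurate answers. Over many queries the noise averages out, which is why analysts can still see overall trends without learning whether any one person is in the data.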

The concept of "privacy by design" is gaining traction as organizations recognize that privacy cannot be effectively bolted on as an afterthought. This approach involves considering privacy at every stage of product development, from initial concept through deployment and eventual retirement. By building privacy into the foundation of systems and processes, organizations can create more trustworthy and compliant solutions while reducing the risk of costly privacy failures.

Consumer attitudes toward privacy are also shifting. After years of trading personal data for free services, many people are becoming more selective about what information they share and with whom. This changing mindset is driving demand for privacy-respecting alternatives to dominant platforms and services. Businesses that can demonstrate genuine commitment to privacy may find themselves with a competitive advantage in this evolving landscape.

However, significant challenges remain in achieving effective data privacy. The global nature of digital services creates jurisdictional complexities, with different regions adopting different privacy standards. The increasing sophistication of tracking technologies makes it difficult for individuals to understand and control how their data is being used. And there are inherent tensions between privacy and other values like security and innovation that must be carefully managed.

Looking ahead, the future of data privacy will likely involve continued evolution of both regulations and technologies. We may see the development of more granular consent mechanisms, better tools for individuals to manage their digital footprints, and new business models that don't rely on extensive data collection. Ultimately, achieving effective privacy in the digital age will require collaboration among technologists, policymakers, businesses, and individuals to create an ecosystem that respects privacy while enabling the benefits of our connected world.

As machine learning systems become increasingly integrated into decision-making processes that affect people's lives, ethical considerations have moved from academic discussions to practical imperatives. From hiring algorithms to predictive policing, ML systems can perpetuate or amplify existing biases, create new forms of discrimination, and operate with troubling opacity. Addressing these ethical challenges requires a multidisciplinary approach that considers technical, social, and philosophical dimensions.

One of the most pressing ethical concerns in machine learning is algorithmic bias. When training data reflects historical inequalities or underrepresents certain groups, the resulting models can perpetuate these patterns. For example, facial recognition systems trained primarily on light-skinned individuals may perform poorly on darker-skinned faces, leading to discriminatory outcomes. Addressing bias requires careful attention to data collection, preprocessing, and model evaluation throughout the development lifecycle.
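One simple way to start evaluating a model for bias is to break its accuracy down by group instead of reporting a single overall number. The sketch below does that for a handful of made-up predictions; the function names, group labels, and data are assumptions for illustration, and accuracy gap is only one of several fairness measures in use.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, true_label, predicted_label) rows."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    acc = accuracy_by_group(records)
    return max(acc.values()) - min(acc.values())


# Made-up evaluation results: the model is right every time for group A
# but only half the time for group B.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

print(accuracy_by_group(records))  # → {'A': 1.0, 'B': 0.5}
print(accuracy_gap(records))       # → 0.5
```

The overall accuracy here is 75%, which looks acceptable until the per-group view shows that all of the errors fall on one group.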

Transparency and explainability represent another major ethical challenge. Many powerful ML models, particularly deep learning systems, operate as "black boxes" whose decision-making processes are difficult to interpret. This opacity becomes problematic when these systems make high-stakes decisions about credit, employment, or criminal justice. Developing techniques to make ML systems more interpretable without sacrificing performance is an active area of research with significant ethical implications.

The issue of accountability in ML systems raises complex questions about responsibility when things go wrong. If an autonomous vehicle causes an accident or a medical diagnosis system makes a fatal error, who is responsible—the developers, the users, the organization that deployed the system, or the algorithm itself? Establishing clear frameworks for accountability is essential for building trust in ML systems and ensuring appropriate recourse when they cause harm.

Privacy concerns take on new dimensions in the context of machine learning. The ability to infer sensitive information from seemingly innocuous data poses risks that may not be apparent to individuals providing that data. For example, shopping patterns might reveal health conditions, or social media activity might indicate political leanings. ML systems that make such inferences raise questions about consent and the appropriate boundaries of data usage.

Addressing these ethical challenges requires more than technical solutions. It necessitates diverse teams that include ethicists, social scientists, and domain experts alongside technical specialists. It requires robust testing and validation processes that specifically look for ethical pitfalls. And it requires ongoing monitoring and adjustment of deployed systems to identify and address ethical issues that may emerge over time.

The future of ethical machine learning will likely involve the development of more sophisticated tools for detecting and mitigating bias, increased regulatory oversight, and the emergence of professional standards for ML practitioners. Perhaps most importantly, it will require a cultural shift within the technology industry toward prioritizing ethical considerations alongside technical performance. By taking these challenges seriously and addressing them proactively, we can work toward ML systems that are not just powerful and efficient, but also fair, transparent, and aligned with human values.